Key Concepts

After completing this chapter, you will be able to:

- Describe the nature of science and its usefulness in explaining the natural world.

- Distinguish among facts, hypotheses, and theories.

- Outline the methodology of science, including the importance of tests designed to disprove hypotheses.

- Discuss the importance of uncertainty in many scientific predictions, and the relevance of this to environmental controversies.

The Nature of Science

Science can be defined as the systematic examination of the structure and functioning of the natural world, including both its physical and biological attributes. Science is also a rapidly expanding body of knowledge, whose ultimate goal is to discover the simplest general principles that can explain the enormous complexity of nature. These principles can be used to gain insights about the natural world and to make predictions about future change.

Science is a relatively recent way of learning about natural phenomena, having largely replaced the influences of less objective methods and world views. The major alternatives to science are belief systems that are influential in all cultures, including those based on religion, morality, and aesthetics. These belief systems are primarily directed toward different ends than science, such as finding meaning that transcends mere existence, learning how people ought to behave, and understanding the value of artistic expression.

Modern science evolved from a way of learning called natural philosophy, which was developed by classical Greeks and was concerned with the rational investigation of existence, knowledge, and phenomena. Compared with modern science, however, studies in natural philosophy used unsophisticated technologies and methods and were not particularly quantitative, sometimes involving only the application of logic.

Modern science began with the systematic investigations of famous 16th- and 17th-century scientists, such as:

- Nicolaus Copernicus (1473-1543), a Polish astronomer who conceived the modern theory of the solar system

- William Gilbert (1544-1603), an Englishman who worked on magnetism

- Galileo Galilei (1564-1642), an Italian who conducted research on the physics of objects in motion, as well as astronomy

- William Harvey (1578-1657), an Englishman who described the circulation of the blood

- Isaac Newton (1642-1727), an Englishman who made important contributions to understanding gravity and the nature of light, formulated laws of motion, and developed the mathematics of calculus

Inductive and Deductive Logic

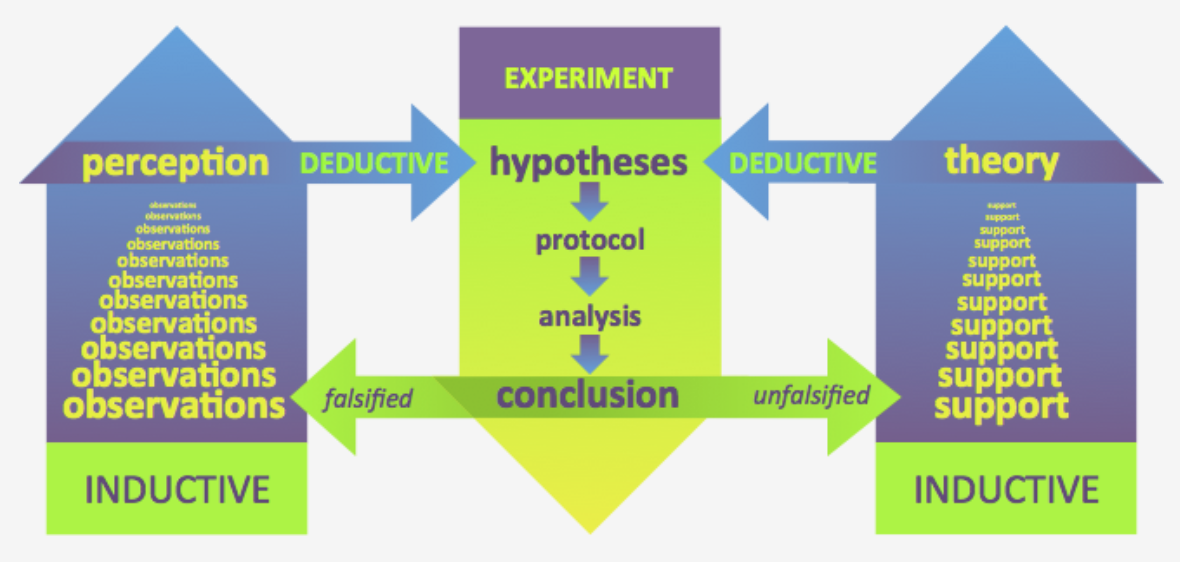

The English philosopher Francis Bacon (1561-1626) was also highly influential in the development of modern science. Bacon was not an actual practitioner of science but was a strong proponent of its emerging methodologies. He promoted the application of inductive logic, in which conclusions are developed from the accumulating evidence of experience and the results of experiments. Inductive logic can lead to unifying explanations based on large bodies of data and observations of phenomena (Figure 2.1). Consider the following example of inductive logic, applied to an environmental topic:

- Observation 1: Marine mammals off the Atlantic coast of North America have large residues of DDT and other chlorinated hydrocarbons in their fat and other body tissues.

- Observation 2: So do marine mammals off the Pacific coast.

- Observation 3: As do those in the Arctic Ocean, although in lower concentrations.

Inductive conclusion: There is a widespread contamination of marine mammals with chlorinated hydrocarbons. Further research may demonstrate that contamination is a global phenomenon. This suggests a potentially important environmental problem.

In contrast, deductive logic involves making one or more initial assumptions and then drawing logical conclusions from those premises. Consequently, the truth of a deductive conclusion depends on the veracity of the original assumptions. If those suppositions are based on false information or on incorrect supernatural belief, then any deduced conclusions are likely to be wrong. Consider the following example of deductive logic:

- Assumption 1: TCDD, an extremely toxic chemical in the dioxin family, is poisonous when present in even the smallest concentrations in food and water—even a single molecule can cause toxicity.

- Assumption 2: Exposure to anything that is poisonous in even the smallest concentrations is unsafe.

- Assumption 3: No exposure that is unsafe should be allowed.

Deductive conclusion 1: No exposure to TCDD is safe.

Deductive conclusion 2: No emissions of TCDD should be allowed.

The two conclusions are consistent with the original assumptions. However, there is disagreement among highly qualified scientists about those assumptions. Many toxicologists believe that exposures to TCDD (and any other potentially toxic chemicals) must exceed a threshold of biological tolerance before poisoning will result (see Chapter 19). In contrast, other scientists believe that even the smallest exposure to TCDD carries some degree of toxic risk. Thus, the strength of deductive logic depends on the acceptance and truth of the original assumptions from which its conclusions flow.

In general, inductive logic plays a much stronger role in modern science than does deductive logic. In both cases, however, the usefulness of any conclusions depends greatly on the accuracy of any observations and other data on which they were based. Poor data may lead to an inaccurate conclusion through the application of inductive logic, as will inappropriate assumptions in deductive logic.

Figure 2.1. Deductive and Inductive Reasoning in Science. Making sense of the natural world begins with observations. (Left) As we collect observations of the world, we can begin to make general predictions (or perceptions) regarding phenomena. This process is known as inductive reasoning, making general predictions from specific phenomena. From these generalized perceptions of reality, specific predictions can be deduced using logic, generating hypotheses. (Middle) Experimentation allows researchers to test the predictions of the hypotheses. If a hypothesis is falsified, that is another observation which adds to our general perception of reality. (Right) As more and more similar but different experiments reinforce a specific prediction, growing support emerges for the development of a scientific theory, another example of inductive reasoning. In turn, a theory can assist in the development of additional, untested hypotheses using deductive reasoning. Source: used with permission from Jason Walker, The Biology Primer.

Goals of Science

The broad goals of science are to understand natural phenomena and to explain how they may be changing over time. To achieve those goals, scientists undertake investigations that are based on information, inferences, and conclusions developed through a systematic application of logic, usually of the inductive sort. As such, scientists carefully observe natural phenomena and conduct experiments.

A higher goal of scientific research is to formulate laws that describe the workings of the universe in general terms. (For example, see Chapter 3 for a description of the laws of thermodynamics, which deal with the transformations of energy among its various states.) Universal laws, along with theories and hypotheses (see below), are used to understand and explain natural phenomena. However, many natural phenomena are extremely complex and may never be fully understood in terms of physical laws. This is particularly true of the ways that organisms and ecosystems are organized and function.

Scientific investigations may be pure or applied. Pure science is driven by intellectual curiosity – it is the unfettered search for knowledge and understanding, without regard for its usefulness in human welfare. Applied science is more goal-oriented and deals with practical difficulties and problems of one sort or another. Applied science might examine how to improve technology, or to advance the management of natural resources, or to reduce pollution or other environmental damages associated with human activities.

Facts, Hypotheses, and Experiments

A fact is an event or thing that is definitely known to have happened, to exist, or to be true. Facts are based on experience and scientific evidence. In contrast, a hypothesis is a proposed explanation for the occurrence of a phenomenon. Scientists formulate hypotheses as statements and then test them through experiments and other forms of research. Hypotheses are developed using logic, inference, and mathematical arguments in order to explain observed phenomena. However, it must always be possible to refute a scientific hypothesis. Thus, the hypothesis that “cats are so intelligent that they prevent humans from discovering it” cannot be logically refuted, and so it is not a scientific hypothesis.

A theory is a broader conception that refers to a set of explanations, rules, and laws. These are supported by a large body of observational and experimental evidence, all leading to robust conclusions. The following are some of the most famous theories in science:

- The theory of gravitation, first proposed by Isaac Newton (1642-1727)

- The theory of evolution by natural selection, published simultaneously in 1858 by two English naturalists, Charles Darwin (1809-1882) and Alfred Russel Wallace (1823-1913)

- The theory of relativity, developed by the German–Swiss physicist Albert Einstein (1879-1955)

Celebrated theories like these are strongly supported by large bodies of evidence, and they will likely persist for a long time. However, we cannot say that these (or any other) theories are known with certainty to be true – some future experiments may yet falsify even these famous theories.

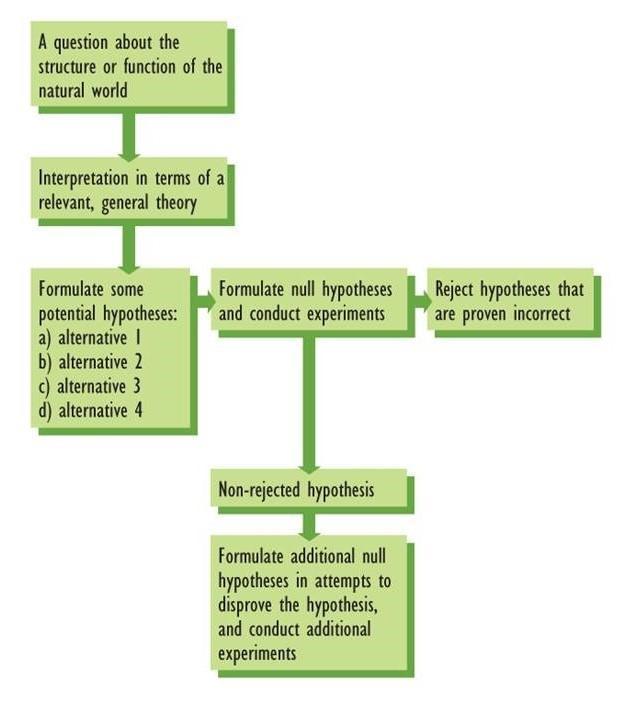

The scientific method begins with the identification of a question involving the structure or function of the natural world, which is usually developed using inductive logic (Figure 2.2). The question is interpreted in terms of existing theory, and specific hypotheses are formulated to explain the character and causes of the natural phenomenon. The research might involve observations made in nature, or carefully controlled experiments, and the results usually give scientists reasons to reject hypotheses rather than to accept them. Most hypotheses are rejected because their predictions are not borne out during the course of research. Any viable hypotheses are further examined through additional research, again largely involving experiments designed to disprove their predictions. Once a large body of evidence accumulates in support of a hypothesis, it can be used to corroborate the original theory.

Figure 2.2. Diagrammatic Representation of the Scientific Method. The scientific method starts with a question, relates that question to a theory, formulates a hypothesis, and then rigorously tests that hypothesis. Source: Modified from Raven and Johnson (1992).

The scientific method is only used to investigate questions that can be critically examined through observation and experiment. Consequently, science cannot resolve value-laden questions, such as the meaning of life, good versus evil, or the existence and qualities of God or any other supernatural being or force.

An experiment is a test or investigation that is designed to provide evidence in support of, or preferably against, a hypothesis. A natural experiment is conducted by observing actual variations of phenomena in nature, and then developing explanations by analysis of possible causal mechanisms. A manipulative experiment involves the deliberate alteration of factors that are hypothesized to influence phenomena. The manipulations are carefully planned and controlled in order to determine whether predicted responses will occur, thereby uncovering causal relationships.

By far the most useful working hypotheses in scientific research are those framed so that they can be disproved rather than merely supported. A null hypothesis is a specific, testable statement that denies something implied by the main hypothesis being studied. Unless null hypotheses are eliminated on the basis of contrary evidence, we cannot be confident of the main hypothesis.

This is an important aspect of scientific investigation. For instance, a particular hypothesis might be supported by many confirming experiments or observations. This does not, however, serve to “prove” the hypothesis – rather, it only supports its conditional acceptance. As soon as a clearly defined hypothesis is falsified by an appropriately designed and well-conducted experiment, it is disproved for all time. This is why experiments designed to disprove hypotheses are a key aspect of the scientific method.
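The logic of testing a null hypothesis can be illustrated with a small calculation. The following is a minimal sketch, not a prescribed method: it assumes a laboratory experiment like the one in Image 2.1, with invented biomass values for control and exposed grass, and it uses an exact permutation test as one possible way of asking how often the observed difference would arise if the null hypothesis ("the chemical has no effect") were true. The function name and all data values are hypothetical.

```python
from itertools import combinations
from statistics import mean


def exact_permutation_p_value(control, treated):
    """One-sided exact permutation test.

    Null hypothesis: the treatment has no effect, so the group labels
    are arbitrary. Returns the fraction of all possible relabelings in
    which (mean of 'control' - mean of 'treated') is at least as large
    as the difference actually observed.
    """
    pooled = control + treated
    n_control = len(control)
    observed = mean(control) - mean(treated)

    total = 0
    as_extreme = 0
    for idx in combinations(range(len(pooled)), n_control):
        chosen = set(idx)
        group_a = [pooled[i] for i in chosen]
        group_b = [pooled[i] for i in range(len(pooled)) if i not in chosen]
        total += 1
        # Small tolerance guards against floating-point rounding.
        if mean(group_a) - mean(group_b) >= observed - 1e-12:
            as_extreme += 1
    return as_extreme / total


# Hypothetical dry biomass (grams per pot); these are not real data.
control = [5.1, 4.8, 5.4, 5.0]
treated = [3.9, 4.2, 3.6, 4.1]

p_value = exact_permutation_p_value(control, treated)
print(f"p = {p_value:.4f}")
```

A small value indicates that the data would be unusual if the null hypothesis were true, which is evidence against it. It does not prove the main hypothesis, which remains only conditionally accepted.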

Revolutionary advances in understanding may occur when an important hypothesis or theory is rejected through discoveries of science. For instance, once it was discovered that the Earth is not flat, it became possible to confidently sail beyond the visible horizon without fear of falling off the edge of the world. Another example involved the discovery by Copernicus that the planets of our solar system revolve around the Sun, and the related concept that the Sun is an ordinary star among many – these revolutionary ideas replaced the previously dominant one that the planets, Sun, and stars all revolved around the Earth.

Thomas Kuhn (1922-1995) was a philosopher of science who emphasized the important role of “scientific revolutions” in achieving great advances in our understanding of the natural world. In essence, Kuhn (1996) said that a scientific revolution occurs when a well-established theory is rigorously tested and then collapses under the accumulating weight of new facts and observations that cannot be explained. This renders the original theory obsolete, to be replaced by a new, more informed paradigm (i.e., a set of assumptions, concepts, practices, and values that constitutes a way of viewing reality and is shared by an intellectual community).

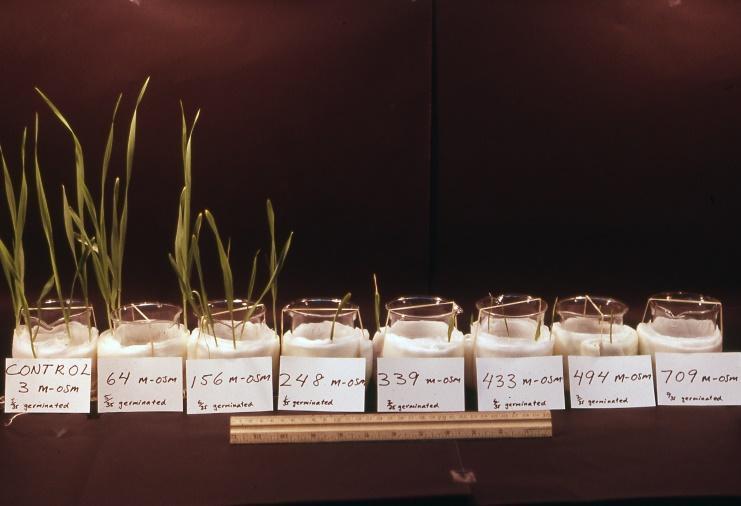

A variable is a factor that is believed to influence a natural phenomenon. For example, a scientist might hypothesize that the productivity of a wheat crop is potentially limited by such variables as the availability of water or of nutrients such as nitrogen and phosphorus. Some of the most powerful scientific experiments involve the manipulation of key (or controlling) variables and the comparison of results of those treatments with a control that was not manipulated. In the example just described, the specific variable that controls wheat productivity could be identified by conducting an experiment in which test populations are provided with varying amounts of water, nitrogen, and phosphorus, alone and in combination, and then comparing the results with a non-manipulated control.
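The wheat experiment described above, in which factors are applied "alone and in combination" and compared with a control, can be laid out as a factorial design. The sketch below lists the treatments under a simplifying assumption that each factor has only two levels (added or not added), whereas the text refers to varying amounts. The function name is hypothetical.

```python
from itertools import product

# The three candidate limiting variables named in the text.
factors = ["water", "nitrogen", "phosphorus"]


def factorial_treatments(factor_names):
    """Return every combination of factors being added or withheld.

    Each treatment is a tuple of the factors that are added; the empty
    tuple is the non-manipulated control.
    """
    treatments = []
    for levels in product([False, True], repeat=len(factor_names)):
        added = tuple(name for name, on in zip(factor_names, levels) if on)
        treatments.append(added)
    return treatments


treatments = factorial_treatments(factors)
for treatment in treatments:
    label = " + ".join(treatment) if treatment else "control (no additions)"
    print(label)
```

With three two-level factors there are eight treatments, including the control. Comparing the single-factor treatments with the combinations is what allows a limiting variable, or an interaction among variables, to be identified.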

In some respects, however, the explanation of the scientific method offered above is a bit uncritical. It perhaps suggests a too-orderly progression in terms of logical, objective experimentation and comparison of alternative hypotheses. These are, in fact, important components of the scientific method. Nevertheless, it is important to understand that the insights and personal biases of scientists are also significant in the conduct and progress of science. In most cases, scientists design research that they think will “work” to yield useful results and contribute to the orderly advancement of knowledge in their field. Karl Popper (1902-1994), a European philosopher, noted that scientists tend to use their “imaginative preconception” of the workings of the natural world to design experiments based on their informed insights. This means that effective scientists must be more than knowledgeable and technically skilled – they should also be capable of a degree of insightful creativity when forming their ideas, hypotheses, and research.

Image 2.1. An experiment is a controlled investigation designed to provide evidence for, or preferably against, a hypothesis about the working of the natural world. This laboratory experiment exposed test populations of a grass to different concentrations of a toxic chemical. B. Freeman.

Uncertainty

Much scientific investigation involves the collection of observations by measuring phenomena in the natural world. Another important aspect of science involves making predictions about the future values of variables. Such projections require a degree of understanding of the relationships among variables and their influencing factors, and of recent patterns of change. However, many kinds of scientific information and predictions are subject to inaccuracy. This occurs because measured data are often approximations of the true values of phenomena, and predictions are rarely fulfilled exactly. The accuracy of observations and predictions is influenced by various factors, especially those described in the following sections.

Predictability

A few phenomena are considered to have a universal character and are consistent wherever and whenever they are accurately measured. One of the best examples of such a universal constant is the speed of light, which always has a value of 2.998 × 10⁸ meters per second, regardless of where it is measured or of the speed of the body from which the light is emitted. Similarly, certain relationships describing transformations of energy and matter, known as the laws of thermodynamics (Chapter 3), always give reliable predictions.

However, most natural phenomena are not so consistent – depending on circumstances, there are exceptions to general predictions about them. This circumstance is particularly true of biology and ecology, related fields of science in which almost all general predictions have exceptions. In fact, laws or unifying principles of biology or ecology have not yet been discovered, in contrast to the several esteemed laws and 11 universal constants of physics. For this reason, biologists and ecologists have great difficulties making accurate predictions about the responses of organisms and ecosystems to environmental change. This is why biologists and ecologists are sometimes said to have “physics envy.”

In large part, the inaccuracies of biology and ecology occur because key functions are controlled by poorly understood, and sometimes unidentified, environmental influences. Consequently, predictions about future values of biological and ecological variables or the causes of changes are seldom accurate. For example, even though ecologists in North America have been monitoring the population size of spruce budworm (an important pest of conifer forests) for some years, they cannot accurately predict its future abundance in particular stands of forest or in larger regions. This is because the abundance of this moth is influenced by a variety of environmental factors, including tree-species composition, age of the forest, abundance of its predators and parasites, quantities of its preferred foods, weather at critical times of year, and insecticide use to reduce its populations (see Chapter 26). Biologists and ecologists do not fully understand this complexity, and perhaps they never will.

Variability

Many natural phenomena are highly variable in space and time. This is true of physical and chemical variables as well as of biological and ecological ones. Within a forest, for example, the amount of sunlight reaching the ground varies greatly with time, depending on the hour of the day and the season of the year. It also varies spatially, depending on the density of foliage over any place where sunlight is being measured. Similarly, the density of a particular species of fish within a river typically varies in response to changes in habitat conditions and other influences. Most fish populations also vary over time, especially migratory species such as salmon. In environmental science, replicated (or independently repeated) measurements and statistical analyses are used to measure and account for these kinds of temporal and spatial variations.
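As a rough illustration of how replicated measurements are summarized, the sketch below computes the mean, the sample standard deviation, and the standard error of the mean for a set of replicates. The readings are invented for illustration (imagine light measured at five spots on a forest floor), and the function name is hypothetical.

```python
from math import sqrt
from statistics import mean, stdev


def summarize(replicates):
    """Summarize replicated measurements of one variable."""
    n = len(replicates)
    sd = stdev(replicates)  # sample standard deviation (n - 1 denominator)
    return {
        "n": n,
        "mean": mean(replicates),
        "sd": sd,
        "se": sd / sqrt(n),  # standard error of the mean
    }


# Hypothetical light readings (arbitrary units) at five locations.
light_readings = [12.0, 18.0, 9.0, 15.0, 21.0]

summary = summarize(light_readings)
print(summary)
```

The standard deviation describes how variable the individual measurements are, while the standard error describes how well the mean is estimated. Taking more replicates reduces the standard error but not the underlying variability.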

Accuracy and Precision

Accuracy refers to the degree to which a measurement or observation reflects the actual, or true, value of the subject. For example, the insecticide DDT and the metal mercury are potentially toxic chemicals that occur in trace concentrations in all organisms, but their small residues are difficult to analyze chemically. Some of the analytical methods used to determine the concentrations of DDT and mercury are more accurate than others and therefore provide relatively useful and reliable data compared with less accurate methods.

Precision is related to the degree of repeatability of a measurement or observation. For example, suppose that the actual number of caribou in a migrating herd is 10,246 animals. A wildlife ecologist might estimate that there were about 10,000 animals in that herd, which for practical purposes is a reasonably accurate reckoning of the actual number of caribou. If other ecologists also independently estimate the size of the herd at about 10,000 caribou, there is a good degree of precision among the values. If, however, some systematic bias existed in the methodology used to count the herd, giving consistent estimates of 15,000 animals (remember, the actual population is 10,246 caribou), these estimates would be considered precise, but not particularly accurate.
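The caribou example can be made concrete with a short calculation. This is a sketch under stated assumptions: the true herd size of 10,246 comes from the text, but the individual estimates are invented, and using the mean error ("bias") for accuracy and the standard deviation ("spread") for precision is one common convention rather than the only possible one.

```python
from statistics import mean, stdev

TRUE_HERD_SIZE = 10_246  # actual number of caribou, from the example above


def accuracy_and_precision(estimates, true_value):
    """Return (bias, spread) for a set of independent estimates.

    bias:   mean estimate minus the true value (closer to 0 = more accurate)
    spread: standard deviation of the estimates (smaller = more precise)
    """
    bias = mean(estimates) - true_value
    spread = stdev(estimates)
    return bias, spread


# Hypothetical counts by four ecologists using a sound method.
unbiased_counts = [10_000, 10_300, 10_100, 10_400]
# Hypothetical counts made with a systematically biased method.
biased_counts = [15_000, 15_100, 14_900, 15_000]

bias_a, spread_a = accuracy_and_precision(unbiased_counts, TRUE_HERD_SIZE)
bias_b, spread_b = accuracy_and_precision(biased_counts, TRUE_HERD_SIZE)

print(f"sound method:  bias = {bias_a:+.0f}, spread = {spread_a:.0f}")
print(f"biased method: bias = {bias_b:+.0f}, spread = {spread_b:.0f}")
```

In this invented data set the biased method agrees closely with itself (small spread) while missing the true value by thousands of animals, which is the sense in which estimates can be precise but not accurate.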

Precision is also related to the number of digits with which data are reported. If you were using a flexible tape to measure the lengths of 10 large, wriggly snakes, you would probably measure the reptiles only to the nearest centimeter. The strength and squirminess of the animals make more precise measurements impossible. The reported average length of the 10 snakes should reflect the original measurements and might be given as 204 cm and not a value such as 203.8759 cm. The latter number might be displayed as a digital average by a calculator or computer, but it is unrealistically precise.

Significant figures are related to accuracy and precision and can be defined as the number of digits used to report data from analyses or calculations. Significant figures are most easily understood by examples. The number 179 has three significant figures, as does the number 0.0849 and also 0.000794 (the zeros preceding the significant digits do not count). However, the number 195,000,000 has nine significant figures (the zeros following are meaningful), although the number 195 × 10⁶ has only three significant figures.

It is rarely useful to report environmental or ecological data to more than 2-4 significant figures. This is because any more would generally exceed the accuracy and precision of the methodology used in the estimation and would therefore be unrealistic. For example, the approximate population of the United States in 2020 was 330 million people, which uses three significant figures. However, the population should not be reported as 330,000,000, which implies an unrealistic accuracy and precision of nine significant figures.
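Rounding a calculated value to a chosen number of significant figures is easy to automate. The helper below is a minimal sketch with a hypothetical function name, applied to values that appear in the examples above.

```python
from math import floor, log10


def round_to_sig_figs(value, sig_figs):
    """Round value to the given number of significant figures."""
    if value == 0:
        return 0.0
    # Position of the leading digit: 2 for 203.8759, -4 for 0.000794.
    magnitude = floor(log10(abs(value)))
    decimals = sig_figs - 1 - magnitude
    return round(value, decimals)


print(round_to_sig_figs(203.8759, 3))  # average snake length in cm
print(round_to_sig_figs(10246, 2))     # caribou herd size
print(round_to_sig_figs(0.000794, 2))  # a small decimal value
```

The function only controls how many digits are reported. Deciding how many digits are justified still depends on the accuracy and precision of the method that produced the data.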

A Need for Skepticism

Environmental science is filled with many examples of uncertainty – in present values and future changes of environmental variables, as well as in predictions of biological and ecological responses to those changes. To some degree, the difficulties associated with scientific uncertainty can be mitigated by developing improved methods and technologies for analysis and by modelling and examining changes occurring in different parts of the world. The latter approach enhances our understanding by providing convergent evidence about the occurrence and causes of natural phenomena.

However, scientific information and understanding will always be subject to some degree of uncertainty. Therefore, predictions will always be inaccurate to some extent, and this uncertainty must be considered when trying to understand and deal with the causes and consequences of environmental changes. As such, all information and predictions in environmental science must be critically interpreted with uncertainty in mind (In Detail 2.1). This should be done whenever one is learning about an environmental issue, whether it involves listening to a speaker in a classroom, at a conference, or on video, or when reading an article in a newspaper, textbook, website, or scientific journal.

Environmental issues are acutely important to the welfare of people and other species. Science and its methods allow for a critical and objective identification of key issues, the investigation of their causes, and a degree of understanding of the consequences of environmental change. Scientific information influences decision making about environmental issues, including whether to pursue expensive strategies to avoid further, but often uncertain, damage.

Scientific information is, however, only one consideration for decision makers, who are also concerned with the economic, cultural, and political contexts of environmental problems (see Environmental Issues 1.1). In fact, when deciding how to deal with the causes and consequences of environmental changes, decision makers may give greater weight to non-scientific (social and economic) considerations than to scientific ones, especially when there is uncertainty about the latter. The most important decisions about environmental issues are made by politicians and senior bureaucrats in government, or by private managers, rather than by environmental scientists. Decision makers typically worry about the short-term implications of their decisions on their chances for re-election or continued employment, and on the economic activity of a company or society at large, as much as they do about the consequences of environmental damage.

In Detail 2.1. Critical Evaluation of an Overload of Information

More so than any previous society, we live today in a world of easy and abundant information. It has become remarkably easy for people to communicate with others over vast distances, turning the world into a “global village” (a phrase coined by Marshall McLuhan (1911-1980), a Canadian philosopher, to describe the phenomenon of universal networking). This global connectedness has been facilitated by technologies for transferring ideas and knowledge—particularly electronic communication devices, such as radio, television, computers, and their networks. Today, these technologies compress space and time to achieve a virtually instantaneous communication. In fact, so much information is now available that the situation is often referred to as an “information overload” that must be analyzed critically. Critical analysis is the process of sorting information and making scientific enquiries about data. Involved in all aspects of the scientific process, critical analysis scrutinizes information and research by posing sensible questions such as the following:

- Is the information derived from a scientific framework consisting of a hypothesis that has been developed and tested, within the context of an existing body of knowledge and theory in the field?

- Were the methodologies used likely to provide data that are objective, accurate, and precise? Were the data analyzed by statistical methods that are appropriate to the data structure and to the questions being asked?

- Were the results of the research compared with other pertinent work that has been previously published? Were key similarities and differences discussed and a conclusion deduced about what the new work reveals about the issue being investigated?

- Is the information based on research published in a refereed journal—one that requires highly qualified reviewers in the subject area to scrutinize the work, followed by an editorial decision about whether it warrants publication?

- If the analysis of an issue was based on incomplete or possibly inaccurate information, was a precautionary approach used in order to accommodate the uncertainty inherent in the recommendations?

All users of published research have an obligation to critically evaluate what they are reading in these ways in order to decide whether the theory is appropriate, the methodologies reliable, and the conclusions sufficiently robust. Because so many environmental issues are controversial, with data and information presented on both sides of the debate, people need to be able to formulate objectively critical judgments. For this reason, people need a high degree of environmental literacy – an informed understanding of the causes and consequences of environmental damages. Being able to critically analyze information is a key personal benefit of studying environmental science.

Conclusions

The procedures and methods of science are important in identifying, understanding, and resolving environmental problems. At the same time, however, social and economic issues are also vital considerations. Although science has made tremendous progress in helping us to understand the natural world, the extreme complexity of biology and ecosystems makes it difficult for environmental scientists to make reliable predictions about the consequences of many human economic activities and other influences. This context underscores the need for continued study of the scientific and socio-economic dimensions of environmental problems, even while practical decisions must be made to deal with obvious issues as they arise.

Questions for Review

- Outline the reasons why science is a rational way of understanding the natural world.

- What are the differences between inductive and deductive logic? Why is inductive logic more often used by scientists when formulating hypotheses and generalizations about the natural world?

- Why are null hypotheses an efficient way to conduct scientific research? Identify a hypothesis that is suitable for examining a specific problem in environmental science and suggest a corresponding null hypothesis that could be examined through research.

- What are the causes of variation in natural phenomena? Choose an example, such as differences in the body weights of a defined group of people, and suggest reasons for the variation.

Questions for Discussion

- What are the key differences between science and a less objective belief system, such as religion?

- What factors result in scientific controversies about environmental issues? Contrast these with environmental controversies that exist because of differing values and world views.

- Explain why there are no scientific “laws” to explain the structure and function of ecosystems.

- Many natural phenomena are highly variable, particularly ones that are biological or ecological. What are the implications of this variability for understanding and predicting the causes and consequences of environmental changes? How do environmental scientists cope with this challenge of a variable natural world?

Exploring Issues

- Devise an environmental question of interest to yourself. Suggest useful hypotheses to investigate, identify the null hypotheses, and outline experiments that you might conduct to provide answers to this question.

- During a research project investigating mercury, an environmental scientist performed a series of chemical analyses of fish caught in Lake Erie. The sampling program involved seven species of fish obtained from various habitats within the lake. A total of 360 fish of various sizes and sexes were analyzed. It was discovered that 30% of the fish had residue levels greater than 0.15 ppm of mercury, the upper level of contamination recommended by the United States Environmental Protection Agency for fish eaten by humans. The scientist reported these results to a governmental regulator, who was alarmed by the high mercury residues because of Lake Erie’s popularity as a place where people fish for food. The regulator asked the scientist to recommend whether it was safe to eat any fish from the lake or whether to avoid only certain sizes, sexes, species, or habitats. What sorts of data analyses should the scientist perform to develop useful recommendations? What other scientific and non-scientific aspects should be considered?

References Cited and Further Reading

American Association for the Advancement of Science (AAAS). 1990. Science for All Americans. AAAS, Washington, DC.

Barnes, B. 1985. About Science. Blackwell Ltd., London, UK.

Giere, R.N. 2005. Understanding Scientific Reasoning. 5th ed. Wadsworth Publishing, New York, NY.

Kuhn, T.S. 1996. The Structure of Scientific Revolutions. 3rd ed. University of Chicago Press, Chicago, IL.

McCain, G. and E.M. Siegal. 1982. The Game of Science. Holbrook Press Inc., Boston, MA.

Moore, J.A. 1999. Science as a Way of Knowing. Harvard University Press, Boston, MA.

Popper, K. 1979. Objective Knowledge: An Evolutionary Approach. Clarendon Press, Oxford, UK.

Raven, P.H., G.B. Johnson, K.A. Mason, and J. Losos. 2013. Biology. 10th ed. McGraw-Hill, Columbus, OH.

Silver, B.L. 2000. The Ascent of Science. Oxford University Press, Oxford, UK.

Candela Citations

- Environmental Science. Authored by: Bill Freedman. Provided by: Dalhousie University. Located at: https://digitaleditions.library.dal.ca/environmentalscience/. License: CC BY-NC: Attribution-NonCommercial