Learning Outcomes

- Discuss big data and its implications on decision making

Once upon a time, there was a small retailer with big ideas. After about five years, the retailer had approximately twenty locations, and the company decided they wanted to be even larger. This retailer sold a lot of franchise locations, opened a lot of corporate locations, and suddenly found itself with over 400 stores in over thirty states. But they were still operating like a mom-and-pop business: sales were being tallied on spreadsheets and product pricing was like throwing a dart at a bunch of numbers on a wall. Orders in their distribution center were being printed off, and people with carts would wheel around, picking items off a shelf and boxing them up by hand.

A retailer that was growing couldn’t continue to operate that way, so they went out and bought a whole new retail management system that changed everything. From the way merchandise was “picked” in the distribution center to how the customer was handled at the cash register, everything was computerized. Loyalty programs were developed for repeat customers, emails were collected—and all of that data was being collected on the back end, ready to be spit out in a variety of cookie-cutter reports, or even customized ones. Sales information was being collected at the speed of a transaction every couple of seconds, and that was just the start.

When the leadership team was ready to make some decisions and went looking for data to help them get to the right answers, there was so much of it that they didn’t even know where to begin. Where once they had to guess what kind of customer was buying Item A, now they knew her email, her income level, the time of day she normally shopped, how many times a year she bought that item, and what other items she chose to buy along with it.

It was enough to make their heads explode.

The term “big data” is used to describe extremely large data sets that may be analyzed computationally to reveal patterns, trends, and associations, especially relating to human behavior and interactions. Often, this data is too large or complex for traditional data-processing application software.

Data can have many rows, or cases of information, but not be terribly complex. Think in terms of the sales of Item A above. We might be able to look at information about the size of the product (did they buy the 10 oz or 22 oz package?), the location at which the sale took place, and the time of day it sold. There might be 700 instances of the sale of that item, so there are 700 rows, or cases, of data.

Now let’s take the information on the customer. We know her age, her income range, and the time of day that she wants to shop. We know the types of products she buys in the store, and what kind of coupons get her to make an extra trip in to shop. We know that she shops primarily at the store on Main Street, but that she sometimes stops in to the store on Pine Street. This is data that has more attributes or columns, and features a higher complexity.

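Data with many cases of information is considered to be statistically powerful: the more rows there are, the easier it is to detect patterns that are really there. Data with more attributes and a higher complexity is more likely to lead to a higher false discovery rate, because every extra column is another chance for a pattern to show up purely by coincidence.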
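To make the point about columns and false discoveries concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a method from the retailer in the story: the sales figures and every attribute are pure random noise, the function names are hypothetical, and the 5% cutoff is a rough rule of thumb. Because nothing in the data is truly related, every attribute that clears the cutoff is a false discovery.

```python
import math
import random


def pearson_r(xs, ys):
    """Plain Pearson correlation between two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


def count_false_discoveries(n_rows, n_columns, seed=0):
    """Count pure-noise attributes that look related to sales by chance."""
    rng = random.Random(seed)
    # Hypothetical "sales" values: random noise, one per row (case).
    sales = [rng.gauss(0, 1) for _ in range(n_rows)]
    # Assumption: rough 5% cutoff for a correlation when nothing is related.
    cutoff = 1.96 / math.sqrt(n_rows)
    hits = 0
    for _ in range(n_columns):
        # Each attribute (column) is also noise, unrelated to sales.
        attribute = [rng.gauss(0, 1) for _ in range(n_rows)]
        if abs(pearson_r(attribute, sales)) > cutoff:
            hits += 1
    return hits


# 700 rows, as in the Item A example; only the number of columns changes.
few = count_false_discoveries(n_rows=700, n_columns=5)
many = count_false_discoveries(n_rows=700, n_columns=200)
print(f"5 attributes: {few} spurious; 200 attributes: {many} spurious")
```

With a 5% cutoff, roughly one noise attribute in twenty will look meaningful, so a table with 200 columns should be expected to yield several spurious "findings" while a table with 5 columns will often yield none. Adding rows shrinks the cutoff, which is one way to see why data with many cases is more statistically powerful.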

Challenges with big data include the ability to capture it to begin with, storing such large amounts of data when it’s captured, analyzing it, sharing it, and even keeping the information secure and private.

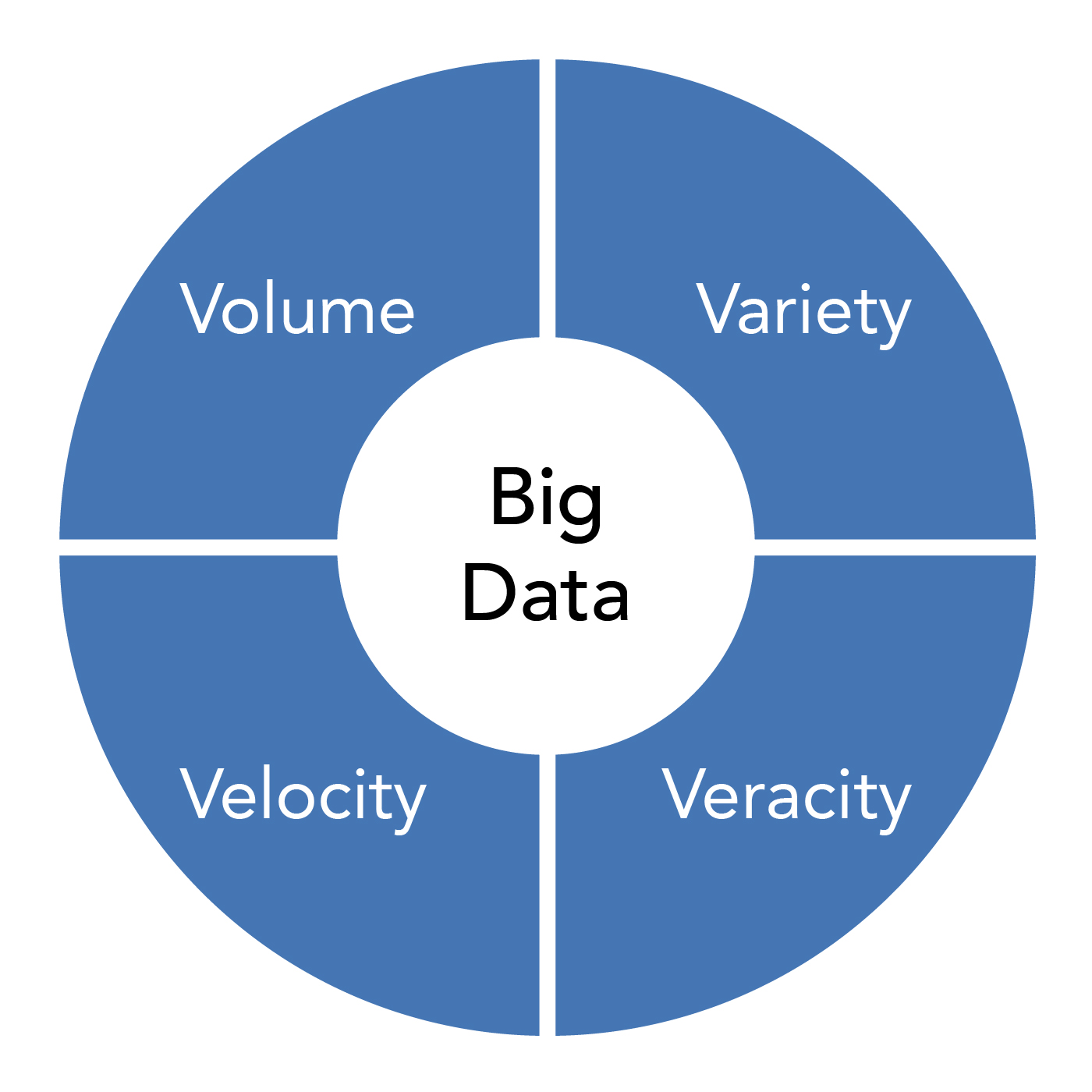

IBM data scientists broke the concept of big data into four pieces: volume, velocity, variety, and veracity.

- Volume. This refers to the amount of data being generated and collected, and it’s always huge. By one widely cited estimate, 90% of all the data in the world was generated in the last two years. Whether a data set counts as big data depends largely on its size. Putting things in perspective, the retail chain Walmart handles more than 1 million customer transactions every hour, feeding databases estimated to hold more than 2.5 petabytes of customer data. That’s 167 times the amount of information held in all the books in the Library of Congress.

- Velocity. This is the speed at which data is generated and made available. A lot of data is available in real time. Two kinds of velocity matter for big data: how frequently the data is generated, and how frequently it is handled, recorded, and published.

- Variety. There are two types of data: structured and unstructured. Structured data is the kind you think of when you think “data,” like the date, amount, and time columns on a bank statement. Unstructured data is all of the other data that’s out there: tweets on Twitter, your mobile phone’s voicemails, photos, GPS locations. One of the goals of big data has been to take those unstructured types of data and learn how to make sense of them.

- Veracity. This term refers to how accurate the data is. There is inherent discrepancy in all data collected, and a good data analyst will account for those discrepancies or clean up the data. Still, overall, the inaccuracy of data costs companies billions of dollars each year.
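The Walmart figures quoted under Volume can be turned into a quick back-of-the-envelope calculation, which also gives a feel for velocity. The sketch below uses only the numbers in the text; the variable names are illustrative, and it assumes decimal units (1 petabyte = 1,000 terabytes) and transactions spread evenly across the hour.

```python
# Figures taken from the Volume bullet above.
walmart_data_pb = 2.5              # petabytes of customer data
library_ratio = 167                # "167 times" the Library of Congress
transactions_per_hour = 1_000_000  # "1 million+ transactions" every hour

# Assumption: decimal units, 1 petabyte = 1,000 terabytes.
TERABYTES_PER_PETABYTE = 1_000

# Volume: the Library of Congress size implied by the comparison.
implied_library_tb = walmart_data_pb * TERABYTES_PER_PETABYTE / library_ratio

# Velocity: the hourly figure expressed per second (assumes an even spread).
transactions_per_second = transactions_per_hour / 3600

print(f"Implied Library of Congress size: about {implied_library_tb:.0f} TB")
print(f"Transactions per second: about {transactions_per_second:.0f}")
```

In other words, the comparison implies a collection of roughly 15 terabytes, and a million transactions an hour works out to a little under 280 new records every second.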

Big data was an issue when companies didn’t know how to handle the sheer amount of data being collected and how quickly the information was coming in. Now, there are consultants to help organizations handle and process the data (it’s a $100 billion industry), and companies have learned to adjust and prepare in other ways. Big data can help an organization make very accurate decisions that create a huge impact.

Kroger

The grocery retailer Kroger sent out a magazine with recipes and other food-related articles. Each article was about a food their consumer was likely to use, and the insert featured coupons for brands the consumer used. Big data helped Kroger increase customer engagement by tailoring its customer rewards through accurate couponing. Kroger analyzes the data of 770 million transactions and boasts that 95% of their transactions come with loyalty card use, which ultimately equates to $12 billion in incremental revenue.

Red Roof Inn

The Red Roof Inn looked to big data to help them capitalize on stranded travelers. In the coldest depths of winter, when recreational travel is at its low point of the year in cold weather areas, Red Roof Inn examined historical weather information and historical flight information, and ultimately started targeting marketing efforts to the 90,000 passengers who end up stranded due to winter weather. Big data helped Red Roof determine the areas where their services might come in handy, and pointed them to mobile advertising and other methods to drive digital bookings.

Taming big data definitely has its rewards.

Candela Citations

- Big Data in Decision Making. Authored by: Freedom Learning Group. Provided by: Lumen Learning. License: CC BY: Attribution

- Image: Big Data. Provided by: Lumen Learning. License: CC BY: Attribution

- Untitled. Authored by: Gerd Altmann. Provided by: Pixabay. Located at: https://pixabay.com/illustrations/web-network-programming-3706562/. License: CC0: No Rights Reserved. License Terms: Pixabay License