What you’ll learn to do: define perception and give examples of gestalt principles and multimodal perception

Seeing something is not the same thing as making sense of what you see. Why is it that our senses are so easily fooled? In this section, you will come to see how our perceptions are not infallible, and they can be influenced by bias, prejudice, and other factors. Psychologists are interested in how these false perceptions influence our thoughts and behavior.

Learning Objectives

- Give examples of gestalt principles, including the figure-ground relationship, proximity, similarity, continuity, and closure

- Define the basic terminology and basic principles of multimodal perception

- Give examples of multimodal and crossmodal behavioral effects

Gestalt Principles of Perception

In the early part of the 20th century, Max Wertheimer published a paper demonstrating that individuals perceived motion in rapidly flickering static images—an insight that came to him as he used a child’s toy tachistoscope. Wertheimer, and his assistants Wolfgang Köhler and Kurt Koffka, who later became his partners, believed that perception involved more than simply combining sensory stimuli. This belief led to a new movement within the field of psychology known as Gestalt psychology. The word gestalt literally means form or pattern, but its use reflects the idea that the whole is different from the sum of its parts. In other words, the brain creates a perception that is more than simply the sum of available sensory inputs, and it does so in predictable ways. Gestalt psychologists translated these predictable ways into principles by which we organize sensory information. As a result, Gestalt psychology has been extremely influential in the area of sensation and perception (Rock & Palmer, 1990).

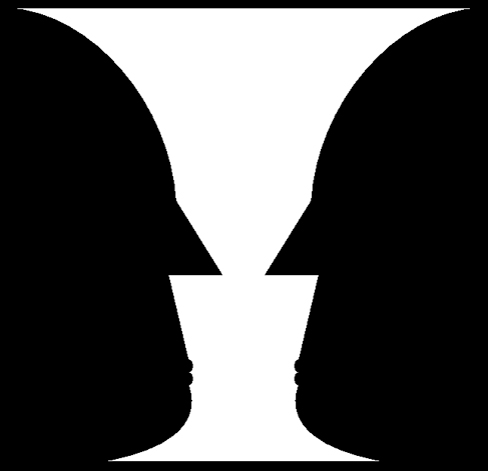

One Gestalt principle is the figure-ground relationship. According to this principle, we tend to segment our visual world into figure and ground. Figure is the object or person that is the focus of the visual field, while the ground is the background. As Figure 1 shows, our perception can vary tremendously, depending on what is perceived as figure and what is perceived as ground. Presumably, our ability to interpret sensory information depends on what we label as figure and what we label as ground in any particular case, although this assumption has been called into question (Peterson & Gibson, 1994; Vecera & O’Reilly, 1998).

Figure 1. The concept of figure-ground relationship explains why this image can be perceived either as a vase or as a pair of faces.

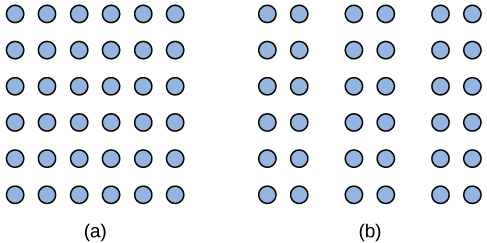

Another Gestalt principle for organizing sensory stimuli into meaningful perception is proximity. This principle asserts that things that are close to one another tend to be grouped together, as Figure 2 illustrates.

Figure 2. The Gestalt principle of proximity suggests that you see (a) one block of dots on the left side and (b) three columns on the right side.

How we read provides another illustration of the proximity concept. For example, we read this sentence like this, notl iket hiso rt hat. We group the letters of a given word together because there are no spaces between the letters, and we perceive separate words because there are spaces between them. Here are some more examples: Cany oum akes enseo ft hiss entence? What doth es e wor dsmea n?
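The grouping rule described above can be made concrete with a minimal sketch. It assumes items lie along a single line and treats any gap wider than a chosen threshold as a group boundary; the function name `group_by_proximity`, the `max_gap` parameter, and the dot positions are hypothetical and chosen only for illustration. It is not a model of how the visual system actually computes grouping.

```python
def group_by_proximity(positions, max_gap):
    """Group 1-D positions; a new group starts when the gap to the
    previous position exceeds max_gap (hypothetical threshold)."""
    groups = []
    for pos in sorted(positions):
        if groups and pos - groups[-1][-1] <= max_gap:
            # Close enough to the previous item: same perceptual group.
            groups[-1].append(pos)
        else:
            # Wide gap (or first item): start a new group.
            groups.append([pos])
    return groups


# Hypothetical horizontal dot positions: tight clusters separated by wide gaps.
dots = [0, 1, 2, 10, 11, 12, 20, 21, 22]
print(group_by_proximity(dots, max_gap=3))
# prints [[0, 1, 2], [10, 11, 12], [20, 21, 22]]
```

With wide gaps between clusters, the sketch returns three groups, much like the three columns in Figure 2(b); with evenly spaced positions it returns a single group, like the block in Figure 2(a).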

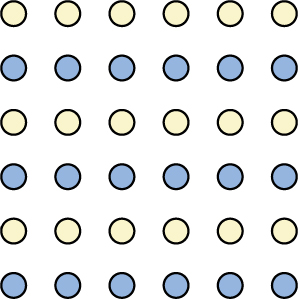

We might also use the principle of similarity to group things in our visual fields. According to this principle, things that are alike tend to be grouped together (Figure 3). For example, when watching a football game, we tend to group individuals based on the colors of their uniforms. When watching an offensive drive, we can get a sense of the two teams simply by grouping along this dimension.

Figure 3. When looking at this array of dots, we likely perceive alternating rows of colors. We are grouping these dots according to the principle of similarity.

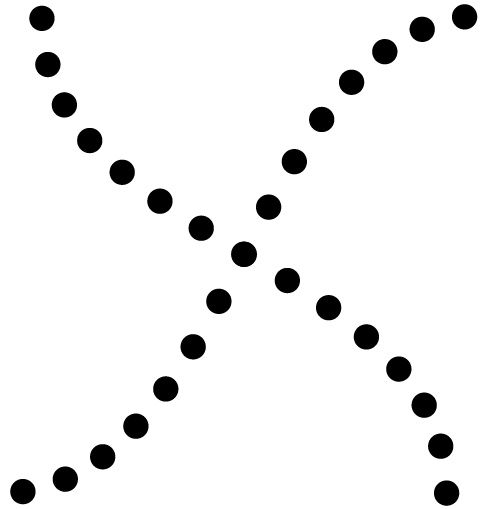

Two additional Gestalt principles are the law of continuity (or good continuation) and closure. The law of continuity suggests that we are more likely to perceive continuous, smoothly flowing lines than jagged, broken lines (Figure 4). The principle of closure states that we organize our perceptions into complete objects rather than as a series of parts (Figure 5).

Figure 4. Good continuation would suggest that we are more likely to perceive this as two overlapping lines, rather than four lines meeting in the center.

Figure 5. Closure suggests that we will perceive a complete circle and rectangle rather than a series of segments.

Link to Learning

Watch this podcast showing real-world illustrations of Gestalt principles.

According to Gestalt theorists, pattern perception, or our ability to discriminate among different figures and shapes, occurs by following the principles described above. You probably feel fairly certain that your perception accurately matches the real world, but this is not always the case. Our perceptions are based on perceptual hypotheses: educated guesses that we make while interpreting sensory information. These hypotheses are informed by a number of factors, including our personalities, experiences, and expectations. We use these hypotheses to generate our perceptual set. For instance, research has demonstrated that those who are given verbal priming produce a biased interpretation of complex ambiguous figures (Goolkasian & Woodbury, 2010).

Dig Deeper: The Depths of Perception: Bias, Prejudice, and Cultural Factors

In this module, you have learned that perception is a complex process. Built from sensations, but influenced by our own experiences, biases, prejudices, and cultures, perceptions can be very different from person to person. Research suggests that implicit racial prejudice and stereotypes affect perception. For instance, several studies have demonstrated that non-Black participants identify weapons faster and are more likely to identify non-weapons as weapons when the image of the weapon is paired with the image of a Black person (Payne, 2001; Payne, Shimizu, & Jacoby, 2005). Furthermore, White individuals’ decisions to shoot an armed target in a video game are made more quickly when the target is Black (Correll, Park, Judd, & Wittenbrink, 2002; Correll, Urland, & Ito, 2006). This research is important, considering the number of very high-profile cases in the last few decades in which young Black people were killed by individuals who claimed to believe that the unarmed victims were armed and/or represented some threat to their personal safety.

Think It Over

Have you ever listened to a song on the radio and sung along only to find out later that you have been singing the wrong lyrics? Once you found the correct lyrics, did your perception of the song change?

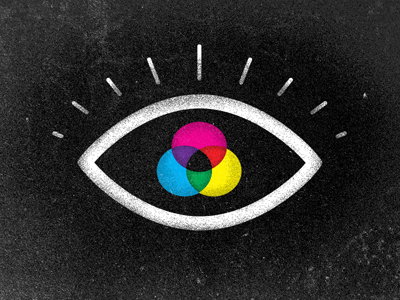

Figure 6. The way we receive the information from the world is called sensation while our interpretation of that information is called perception. [Image: Laurens van Lieshou]

Multimodal Perception

Although it has been traditional to study the various senses independently, most of the time, perception operates in the context of information supplied by multiple sensory modalities at the same time. For example, imagine that you witnessed a car collision. You could describe the stimulus generated by this event by considering each of the senses independently; that is, as a set of unimodal stimuli. Your eyes would be stimulated with patterns of light energy bouncing off the cars involved. Your ears would be stimulated with patterns of acoustic energy emanating from the collision. Your nose might even be stimulated by the smell of burning rubber or gasoline. However, all of this information would be relevant to the same thing: your perception of the car collision. Indeed, unless someone were to explicitly ask you to describe your perception in unimodal terms, you would most likely experience the event as a unified bundle of sensations from multiple senses. In other words, your perception would be multimodal. The question is whether the various sources of information involved in this multimodal stimulus are processed separately by the perceptual system or not.

For the last few decades, perceptual research has pointed to the importance of multimodal perception: the effects on the perception of events and objects in the world that are observed when there is information from more than one sensory modality. Most of this research indicates that, at some point in perceptual processing, information from the various sensory modalities is integrated. In other words, the information is combined and treated as a unitary representation of the world.

Behavioral Effects of Multimodal Perception

Although neuroscientists tend to study very simple interactions between neurons, the fact that they’ve found so many crossmodal areas of the cortex seems to hint that the way we experience the world is fundamentally multimodal. Our intuitions about perception are consistent with this; it does not seem as though our perception of events is constrained to the perception of each sensory modality independently. Rather, we perceive a unified world, regardless of the sensory modality through which we perceive it.

It will probably require many more years of research before neuroscientists uncover all the details of the neural machinery involved in this unified experience. In the meantime, experimental psychologists have contributed to our understanding of multimodal perception through investigations of the behavioral effects associated with it. These effects fall into two broad classes. The first class—multimodal phenomena—concerns the binding of inputs from multiple sensory modalities and the effects of this binding on perception. The second class—crossmodal phenomena—concerns the influence of one sensory modality on the perception of another (Spence, Senkowski, & Roder, 2009).

Multimodal Phenomena

Audiovisual Speech

Multimodal phenomena concern stimuli that generate simultaneous (or nearly simultaneous) information in more than one sensory modality. Speech is a classic example of this kind of stimulus. When an individual speaks, she generates sound waves that carry meaningful information. If the perceiver is also looking at the speaker, then that perceiver also has access to visual patterns that carry meaningful information. Of course, as anyone who has ever tried to lipread knows, there are limits on how informative visual speech information is. Even so, the visual speech pattern alone is sufficient for very robust speech perception. Most people assume that deaf individuals are much better at lipreading than individuals with normal hearing. It may come as a surprise to learn, however, that some individuals with normal hearing are also remarkably good at lipreading (sometimes called “speechreading”). In fact, there is a wide range of speechreading ability in both normal hearing and deaf populations (Andersson, Lyxell, Rönnberg, & Spens, 2001). However, the reasons for this wide range of performance are not well understood (Auer & Bernstein, 2007; Bernstein, 2006; Bernstein, Auer, & Tucker, 2001; Mohammed et al., 2005).

How does visual information about speech interact with auditory information about speech? One of the earliest investigations of this question examined the accuracy of recognizing spoken words presented in a noisy context, much like trying to follow a conversation at a crowded party. To study this phenomenon experimentally, some irrelevant noise (“white noise,” which sounds like a radio tuned between stations) was presented to participants. Embedded in the white noise were spoken words, and the participants’ task was to identify the words. There were two conditions: one in which only the auditory component of the words was presented (the “auditory-alone” condition), and one in which both the auditory and visual components were presented (the “audiovisual” condition). The noise levels were also varied, so that on some trials, the noise was very loud relative to the loudness of the words, and on other trials, the noise was very soft relative to the words. Sumby and Pollack (1954) found that the accuracy of identifying the spoken words was much higher in the audiovisual condition than in the auditory-alone condition. In addition, the pattern of results was consistent with the Principle of Inverse Effectiveness: The advantage gained by audiovisual presentation was highest when the auditory-alone condition performance was lowest (i.e., when the noise was loudest). At these noise levels, the audiovisual advantage was considerable: It was estimated that allowing the participant to see the speaker was equivalent to turning the volume of the noise down by over half. Clearly, the audiovisual advantage can have dramatic effects on behavior.
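The Principle of Inverse Effectiveness comes down to simple arithmetic: subtract auditory-alone accuracy from audiovisual accuracy at each noise level and see where the difference is largest. The sketch below works through that subtraction. The percent-correct values are invented for illustration and are not data from Sumby and Pollack (1954); the function name `audiovisual_gain` and the condition labels are likewise hypothetical.

```python
def audiovisual_gain(auditory_alone, audiovisual):
    """Return the audiovisual advantage (percentage points) per noise level."""
    return {level: audiovisual[level] - auditory_alone[level]
            for level in auditory_alone}


# Illustrative percent-correct values only; NOT results from the original study.
auditory_alone = {"loud noise": 10, "moderate noise": 40, "soft noise": 80}
audiovisual = {"loud noise": 60, "moderate noise": 75, "soft noise": 95}

gain = audiovisual_gain(auditory_alone, audiovisual)

# Inverse effectiveness predicts the largest gain where listening alone is hardest.
hardest = min(auditory_alone, key=auditory_alone.get)
largest_gain = max(gain, key=gain.get)

print(gain)
# prints {'loud noise': 50, 'moderate noise': 35, 'soft noise': 15}
print(hardest == largest_gain)
# prints True
```

In these made-up numbers, seeing the speaker adds 50 percentage points under loud noise but only 15 under soft noise, which is the pattern the principle describes.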

Another phenomenon involving audiovisual speech is a very famous illusion called the “McGurk effect” (named after one of its discoverers). In the classic formulation of the illusion, a movie is recorded of a speaker saying the syllables “gaga.” Another movie is made of the same speaker saying the syllables “baba.” Then, the auditory portion of the “baba” movie is dubbed onto the visual portion of the “gaga” movie. This combined stimulus is presented to participants, who are asked to report what the speaker in the movie said. McGurk and MacDonald (1976) found that 98 percent of their participants reported hearing the syllable “dada,” which was in neither the visual nor the auditory components of the stimulus. These results indicate that when visual and auditory information about speech is integrated, it can have profound effects on perception.

Tactile/Visual Interactions in Body Ownership

Not all multisensory integration phenomena concern speech, however. One particularly compelling multisensory illusion involves the integration of tactile and visual information in the perception of body ownership. In the “rubber hand illusion” (Botvinick & Cohen, 1998), an observer is situated so that one of his hands is not visible. A fake rubber hand is placed near the obscured hand, but in a visible location. The experimenter then uses a light paintbrush to simultaneously stroke the obscured hand and the rubber hand in the same locations. For example, if the middle finger of the obscured hand is being brushed, then the middle finger of the rubber hand will also be brushed. This sets up a correspondence between the tactile sensations (coming from the obscured hand) and the visual sensations (of the rubber hand). After a short time (around 10 minutes), participants report feeling as though the rubber hand “belongs” to them; that is, that the rubber hand is a part of their body. This feeling can be so strong that surprising the participant by hitting the rubber hand with a hammer often leads to a reflexive withdrawing of the obscured hand—even though it is in no danger at all. It appears, then, that our awareness of our own bodies may be the result of multisensory integration.

Crossmodal Phenomena

Crossmodal phenomena are distinguished from multimodal phenomena in that they concern the influence one sensory modality has on the perception of another.

Visual Influence on Auditory Localization

Figure 7. Ventriloquists are able to trick us into believing that what we see and what we hear come from the same source when, in truth, they do not. [Image: Indiapuppet]

A famous (and commonly experienced) crossmodal illusion is referred to as “the ventriloquism effect.” When a ventriloquist appears to make a puppet speak, she fools the listener into thinking that the location of the origin of the speech sounds is at the puppet’s mouth. In other words, instead of localizing the auditory signal (coming from the mouth of a ventriloquist) to the correct place, our perceptual system localizes it incorrectly (to the mouth of the puppet).

Why might this happen? Consider the information available to the observer about the location of the two components of the stimulus: the sounds from the ventriloquist’s mouth and the visual movement of the puppet’s mouth. Whereas it is very obvious where the visual stimulus is coming from (because you can see it), it is much more difficult to pinpoint the location of the sounds. In other words, the very precise visual location of mouth movement apparently overrides the less well-specified location of the auditory information. More generally, it has been found that the location of a wide variety of auditory stimuli can be affected by the simultaneous presentation of a visual stimulus (Vroomen & De Gelder, 2004). In addition, the ventriloquism effect has been demonstrated for objects in motion: The motion of a visual object can influence the perceived direction of motion of a moving sound source (Soto-Faraco, Kingstone, & Spence, 2003).

Auditory Influence on Visual Perception

A related illusion demonstrates the opposite effect, in which sounds influence visual perception. In the double-flash illusion, a participant is asked to stare at a central point on a computer monitor. On the extreme edge of the participant’s vision, a white circle is briefly flashed one time. There is also a simultaneous auditory event: either one beep or two beeps in rapid succession. Remarkably, participants report seeing two visual flashes when the flash is accompanied by two beeps; the same stimulus is seen as a single flash in the context of a single beep or no beep (Shams, Kamitani, & Shimojo, 2000). In other words, the number of heard beeps influences the number of seen flashes!

Link to Learning

Participate in the double-flash experiment here.

Take a look at the bouncing balls illusion here.

Another illusion involves the perception of collisions between two circles (called “balls”) moving toward each other and continuing through each other. Such stimuli can be perceived as either two balls moving through each other or as a collision between the two balls that then bounce off each other in opposite directions. Sekuler, Sekuler, and Lau (1997) showed that the presentation of an auditory stimulus at the time of contact between the two balls strongly influenced the perception of a collision event. In this case, the perceived sound influences the interpretation of the ambiguous visual stimulus.

Crossmodal Speech

Several crossmodal phenomena have also been discovered for speech stimuli. These crossmodal speech effects usually show altered perceptual processing of unimodal stimuli (e.g., acoustic patterns) by virtue of prior experience with the alternate unimodal stimulus (e.g., optical patterns). For example, Rosenblum, Miller, and Sanchez (2007) conducted an experiment examining the ability to become familiar with a person’s voice. Their first interesting finding was unimodal: Much like what happens when someone repeatedly hears a person speak, perceivers can become familiar with the “visual voice” of a speaker. That is, they can become familiar with the person’s speaking style simply by seeing that person speak. Even more astounding was their crossmodal finding: Familiarity with this visual information also led to increased recognition of the speaker’s auditory speech, to which participants had never had exposure.

Similarly, it has been shown that when perceivers see a speaking face, they can identify the (auditory-alone) voice of that speaker, and vice versa (Kamachi, Hill, Lander, & Vatikiotis-Bateson, 2003; Lachs & Pisoni, 2004a, 2004b, 2004c; Rosenblum, Smith, Nichols, Lee, & Hale, 2006). In other words, the visual form of a speaker engaged in the act of speaking appears to contain information about what that speaker should sound like. Perhaps more surprisingly, the auditory form of speech seems to contain information about what the speaker should look like.

Think It Over

In the late 17th century, a scientist named William Molyneux asked the famous philosopher John Locke a question relevant to modern studies of multisensory processing. The question was this: Imagine a person who has been blind since birth, and who is able, by virtue of the sense of touch, to identify three-dimensional shapes such as spheres or pyramids. Now imagine that this person suddenly receives the ability to see. Would the person, without using the sense of touch, be able to identify those same shapes visually? Can modern research in multimodal perception help answer this question? Why or why not? How do the studies about crossmodal phenomena inform us about the answer to this question?

Glossary

closure: organizing our perceptions into complete objects rather than as a series of parts

crossmodal phenomena: effects that concern the influence of the perception of one sensory modality on the perception of another

double-flash illusion: the false perception of two visual flashes when a single flash is accompanied by two auditory beeps

figure-ground relationship: segmenting our visual world into figure and ground

Gestalt psychology: field of psychology based on the idea that the whole is different from the sum of its parts

good continuation: (also, continuity) the tendency to perceive continuous, smoothly flowing lines rather than jagged, broken lines

integrated: the process by which the perceptual system combines information arising from more than one modality

McGurk effect: an effect in which conflicting visual and auditory components of a speech stimulus result in an illusory percept

multimodal: of or pertaining to multiple sensory modalities

multimodal perception: the effects that concurrent stimulation in more than one sensory modality has on the perception of events and objects in the world

multimodal phenomena: effects that concern the binding of inputs from multiple sensory modalities

pattern perception: ability to discriminate among different figures and shapes

perceptual hypothesis: educated guess used to interpret sensory information

proximity: things that are close to one another tend to be grouped together

rubber hand illusion: the false perception of a fake hand as belonging to a perceiver, due to multimodal sensory information

sensory modality: a type of sense; for example, vision or audition

similarity: things that are alike tend to be grouped together

unimodal: of or pertaining to a single sensory modality